Pre-training vs Fine-Tuning vs In-Context Learning of Large Language Models

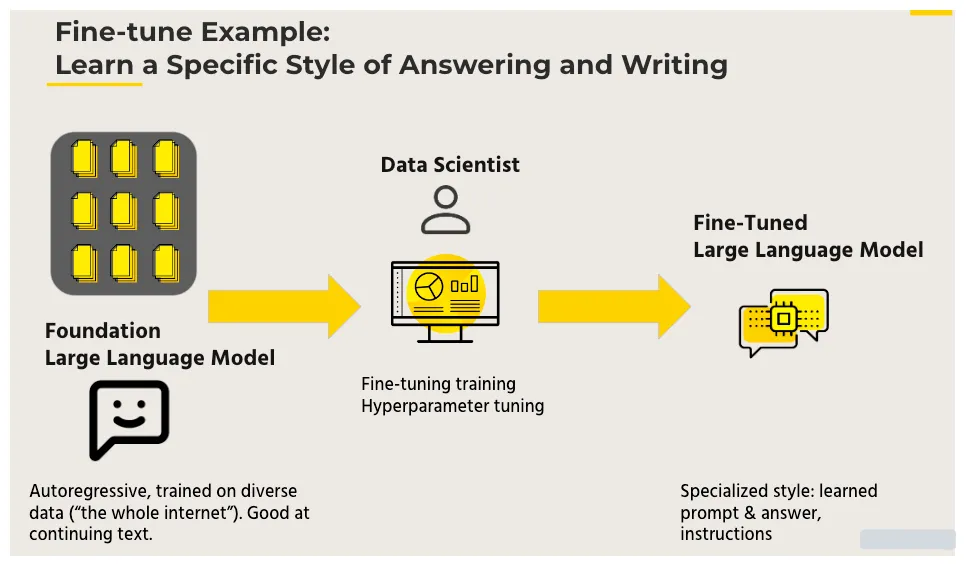

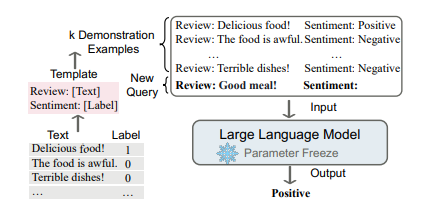

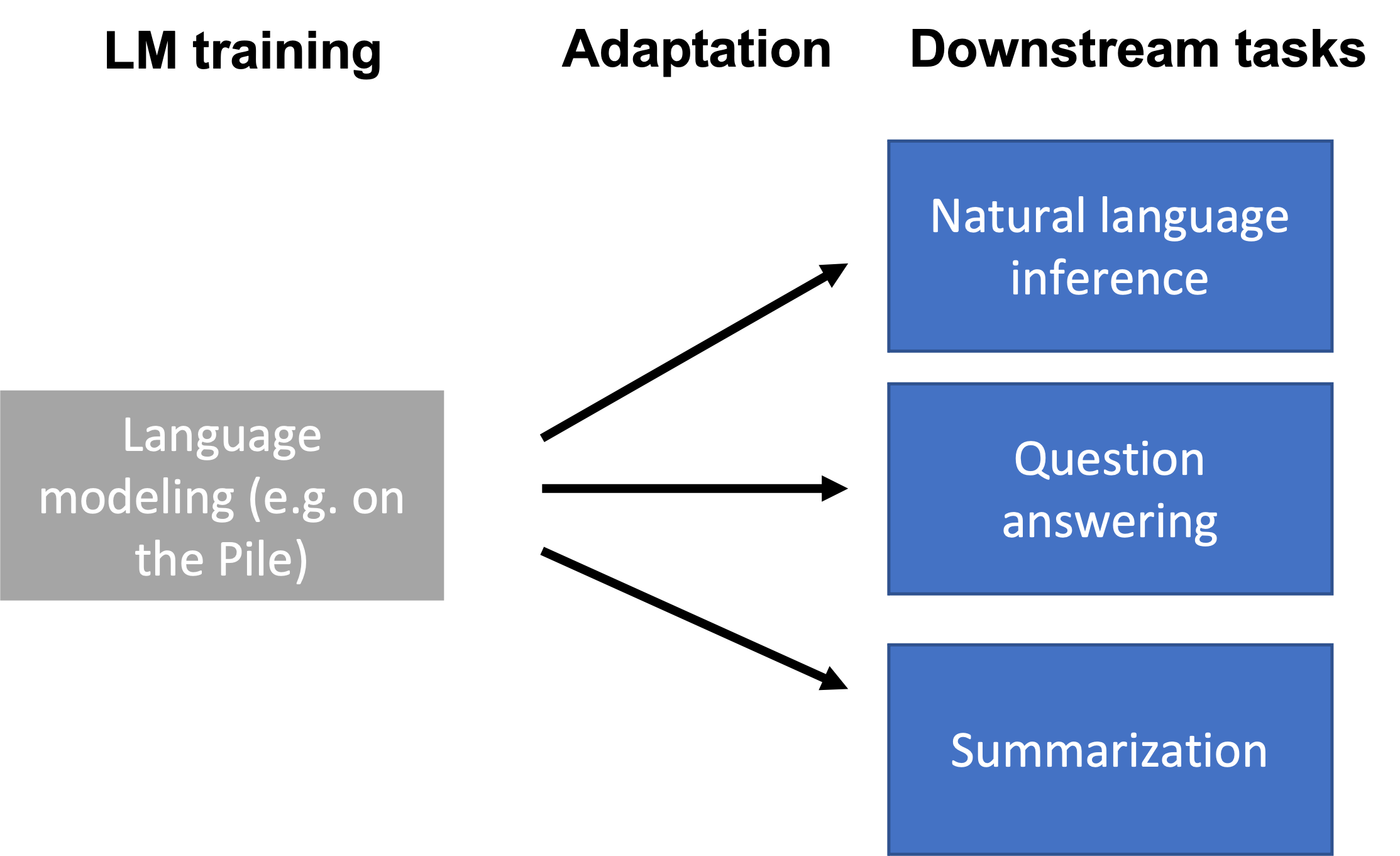

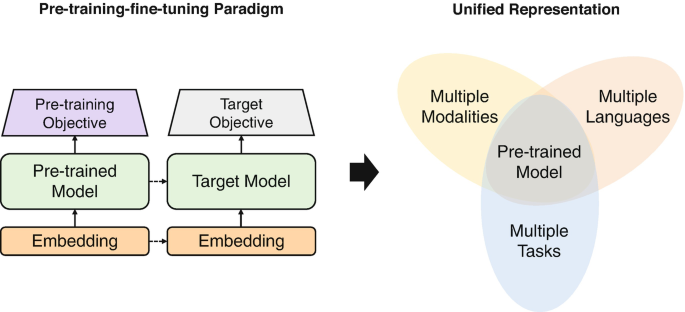

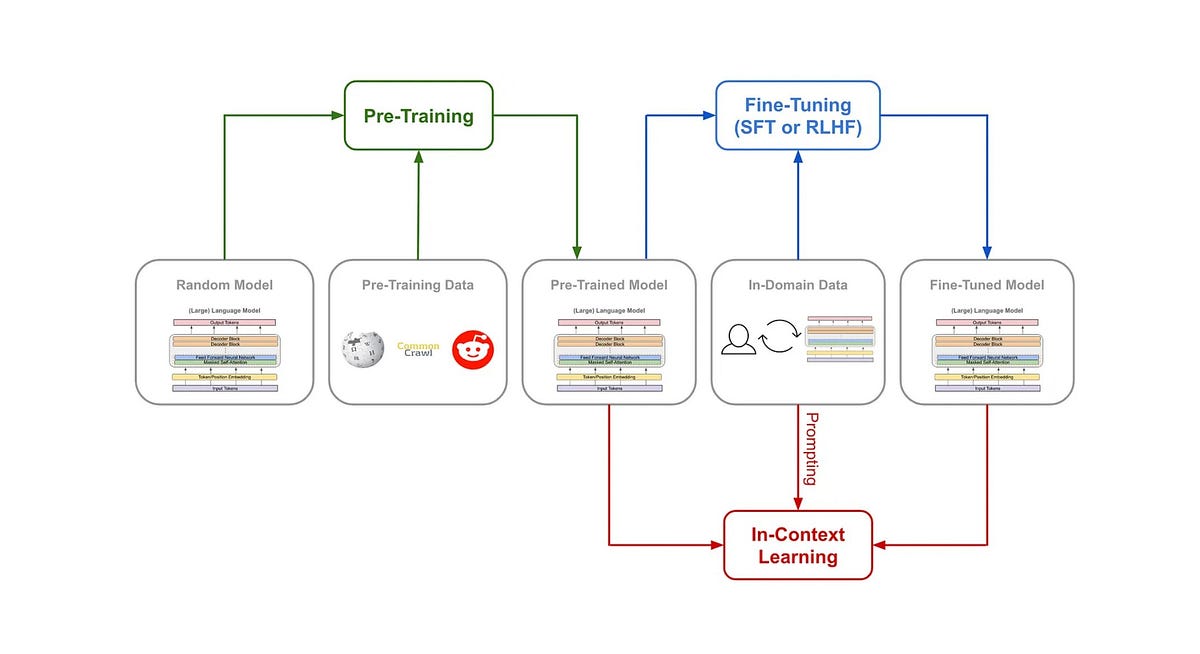

Large language models are first trained on massive text datasets in a process known as pre-training. This stage typically teaches the model to predict the next token, giving it a broad grasp of grammar, facts, and some reasoning ability. Next comes fine-tuning: further training on smaller, targeted datasets so the model specializes in particular tasks or domains. And let's not forget the one that makes prompt engineering possible: in-context learning, which lets a model adapt its responses on the fly to the instructions and examples supplied in the prompt, without any change to its weights.
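To make the contrast concrete, here is a minimal sketch of in-context learning, assuming a plain-text few-shot format. The function name `build_few_shot_prompt` and the `Input:`/`Output:` layout are hypothetical illustrations, not the API of any particular model or library. The model's behavior is steered only by the demonstrations placed in the prompt. Unlike pre-training or fine-tuning, no weights are updated.

```python
# Hypothetical helper (not from any specific library): builds a few-shot
# prompt for in-context learning. No model weights are touched; the
# "learning" lives entirely in the prompt text.
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Format an instruction, labeled demonstrations, then an unlabeled query."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")  # blank line separates demonstrations
    lines.append(f"Input: {query}")
    lines.append("Output:")  # the model is expected to continue from here
    return "\n".join(lines)


if __name__ == "__main__":
    demos = [
        ("The battery lasts all day.", "positive"),
        ("The screen cracked within a week.", "negative"),
    ]
    prompt = build_few_shot_prompt(
        "Classify the sentiment of each review.",
        demos,
        "Setup was quick and painless.",
    )
    print(prompt)
```

The resulting string would be sent to a model as-is, and the model is expected to continue after the final `Output:`. Swapping the demonstrations changes the task without retraining anything, which is exactly what distinguishes in-context learning from fine-tuning.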

Everything You Need To Know About Fine Tuning of LLMs

Symbol tuning improves in-context learning in language models – Google Research Blog

In-Context Learning Approaches in Large Language Models, by Javaid Nabi

Adaptation

Pre-trained Models for Representation Learning

Empowering Language Models: Pre-training, Fine-Tuning, and In-Context Learning, by Bijit Ghosh

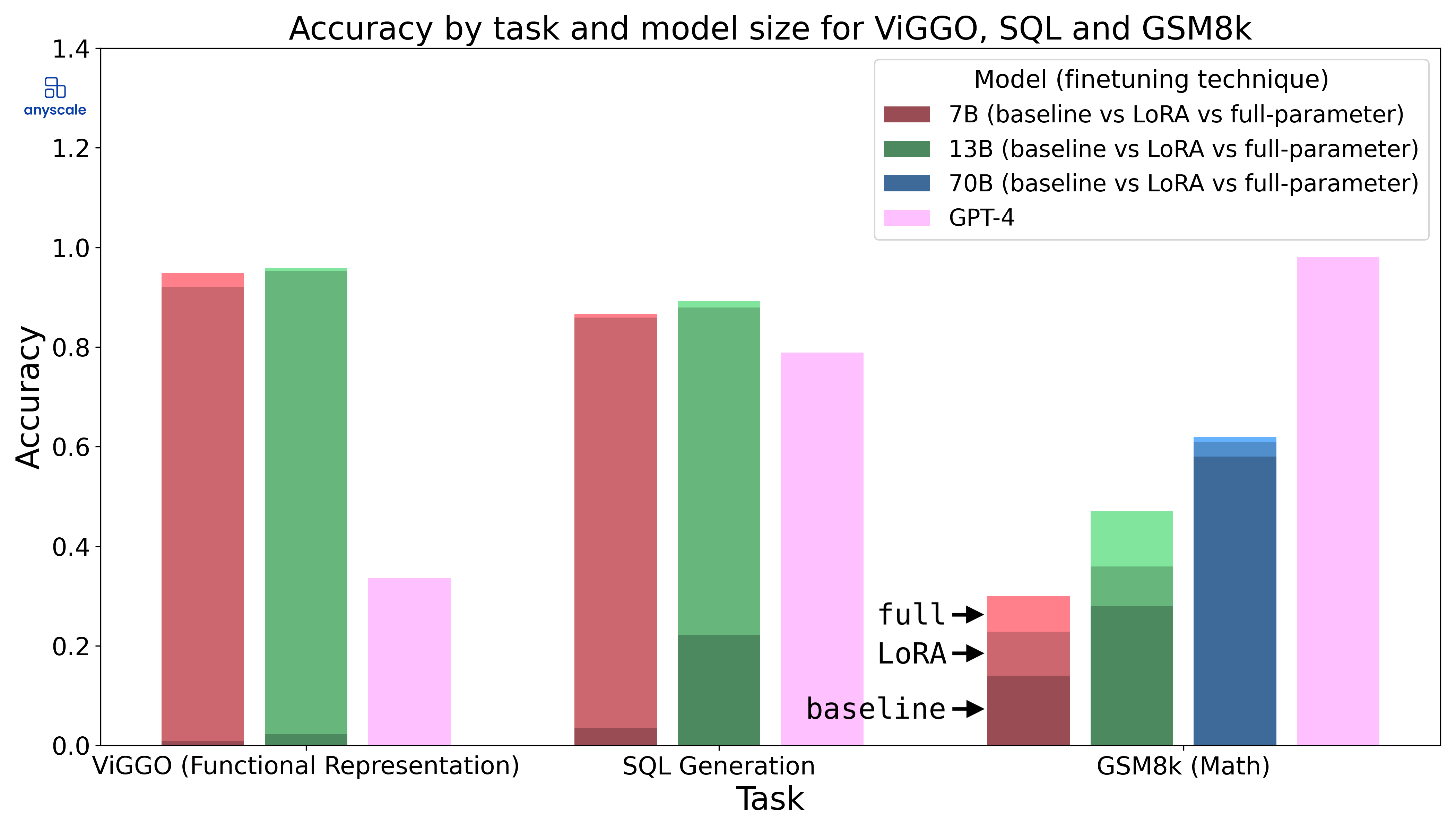

Fine-Tuning LLMs: In-Depth Analysis with LLAMA-2

In-Context Learning, In Context

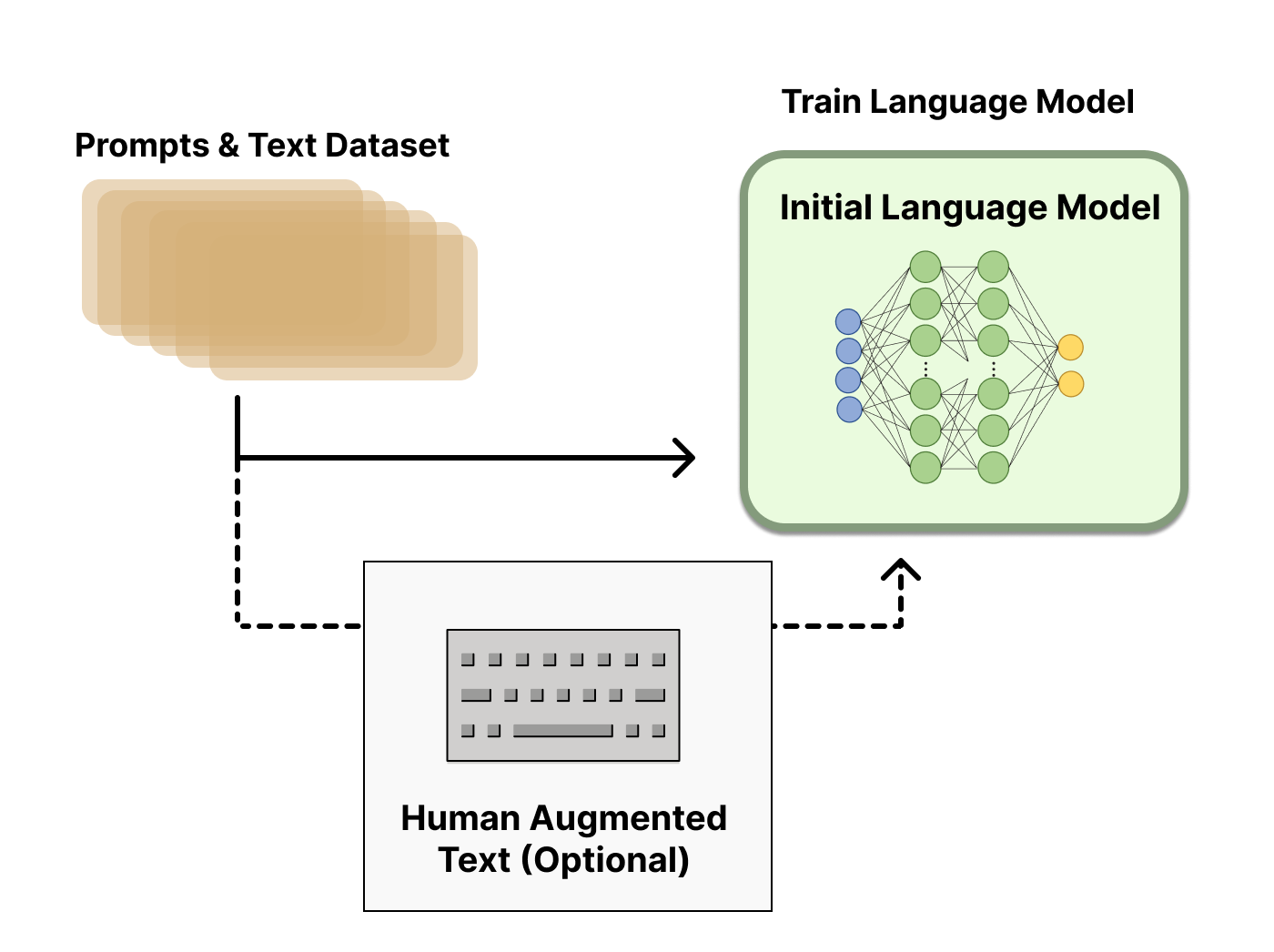

Illustrating Reinforcement Learning from Human Feedback (RLHF)

Fine-Tuning Insights: Lessons from Experimenting with RedPajama

How to Fine-tune Llama 2 with LoRA for Question Answering: A Guide

How to Use Hugging Face AutoTrain to Fine-tune LLMs - KDnuggets
