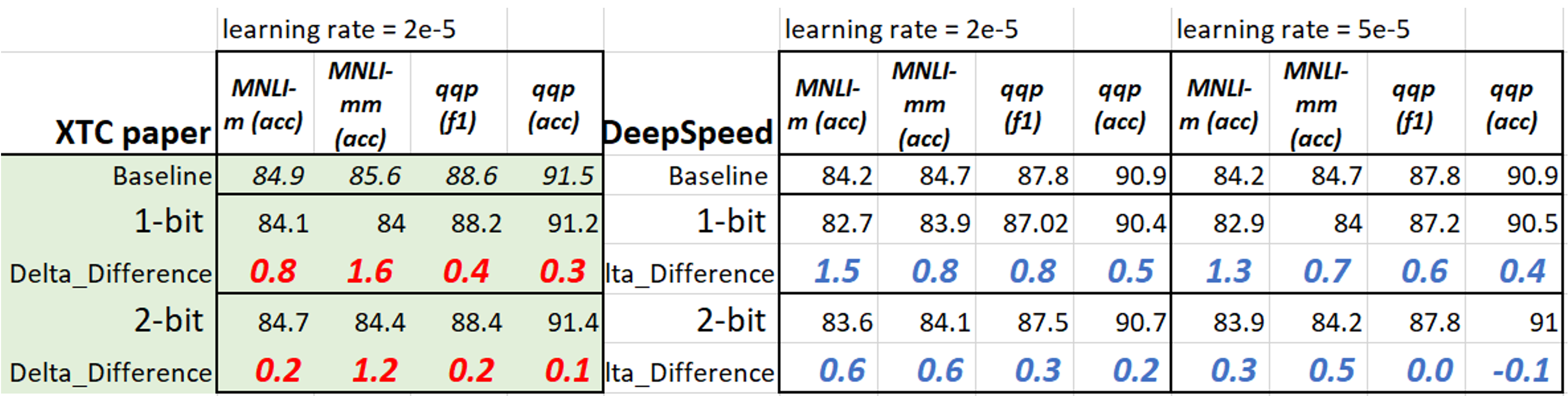

DeepSpeed Compression: A composable library for extreme compression and zero-cost quantization

Large-scale models are revolutionizing deep learning and AI research, driving major improvements in language understanding, creative text generation, multilingual translation, and more. But despite their remarkable capabilities, these models' large size creates latency and cost constraints that hinder the deployment of applications built on top of them. In particular, increased inference time and memory consumption […]
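
To make the memory-consumption point concrete, here is a minimal sketch that estimates how much memory a model's weights alone occupy at several numeric precisions. It assumes weights dominate memory (activations, caches, and runtime overhead are ignored), and the parameter count in the usage block is hypothetical. The helper `weight_memory_gib` is illustrative only and is not part of DeepSpeed's API.

```python
# Illustrative sketch (not DeepSpeed code): weight-only memory footprint
# of a model at different precisions. Activations, caches, and runtime
# overhead are ignored, so real inference memory is higher.

# Storage cost per parameter, in bytes, for common numeric formats.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gib(num_params: int, dtype: str) -> float:
    """Return the memory in GiB needed to store num_params weights in dtype."""
    if dtype not in BYTES_PER_PARAM:
        raise ValueError(f"unsupported dtype: {dtype}")
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    # Hypothetical parameter count, chosen only for illustration.
    n = 175_000_000_000
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {weight_memory_gib(n, dtype):,.1f} GiB")
```

Because memory scales linearly with bytes per parameter, moving from FP16 to INT8 halves weight storage and moving from FP32 to INT4 cuts it by 8x, which is the kind of saving that quantization-based compression targets.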

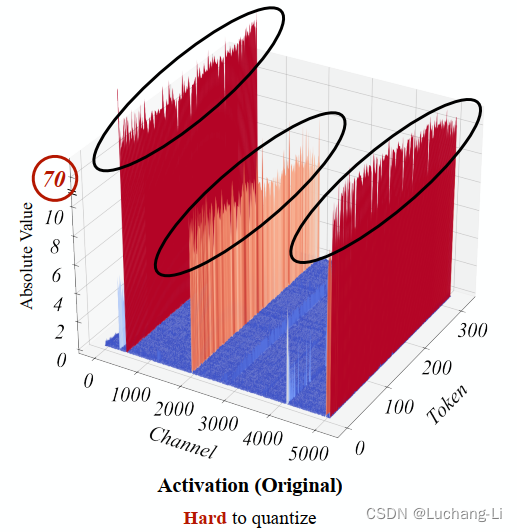

ZeroQuant and SmoothQuant Quantization Summary - CSDN Blog

Toward INT8 Inference: Deploying Quantization-Aware Trained Networks using TensorRT

Introduction to DeepSpeed - Zhihu

Michel LAPLANE (@MichelLAPLANE) / X

Gioele Crispo on LinkedIn: GitHub - gioelecrispo/chunkipy: chunkipy is an extremely useful tool for…

DeepSpeed Compression: A composable library for extreme compression and zero-cost quantization - Microsoft Research

DeepSpeed Model Compression Library - DeepSpeed

This AI newsletter is all you need #6 – Towards AI

GitHub - microsoft/DeepSpeed: DeepSpeed is a deep learning optimization library that makes distributed training and inference easy, efficient, and effective.

Shaden Smith on LinkedIn: DeepSpeed Data Efficiency: A composable library that makes better use of…

Normatec 3 Compression Boot Review

Deep Render Says Its AI Video Compression Tech Will 'Save the

Innova Digital Compression Tester 5612

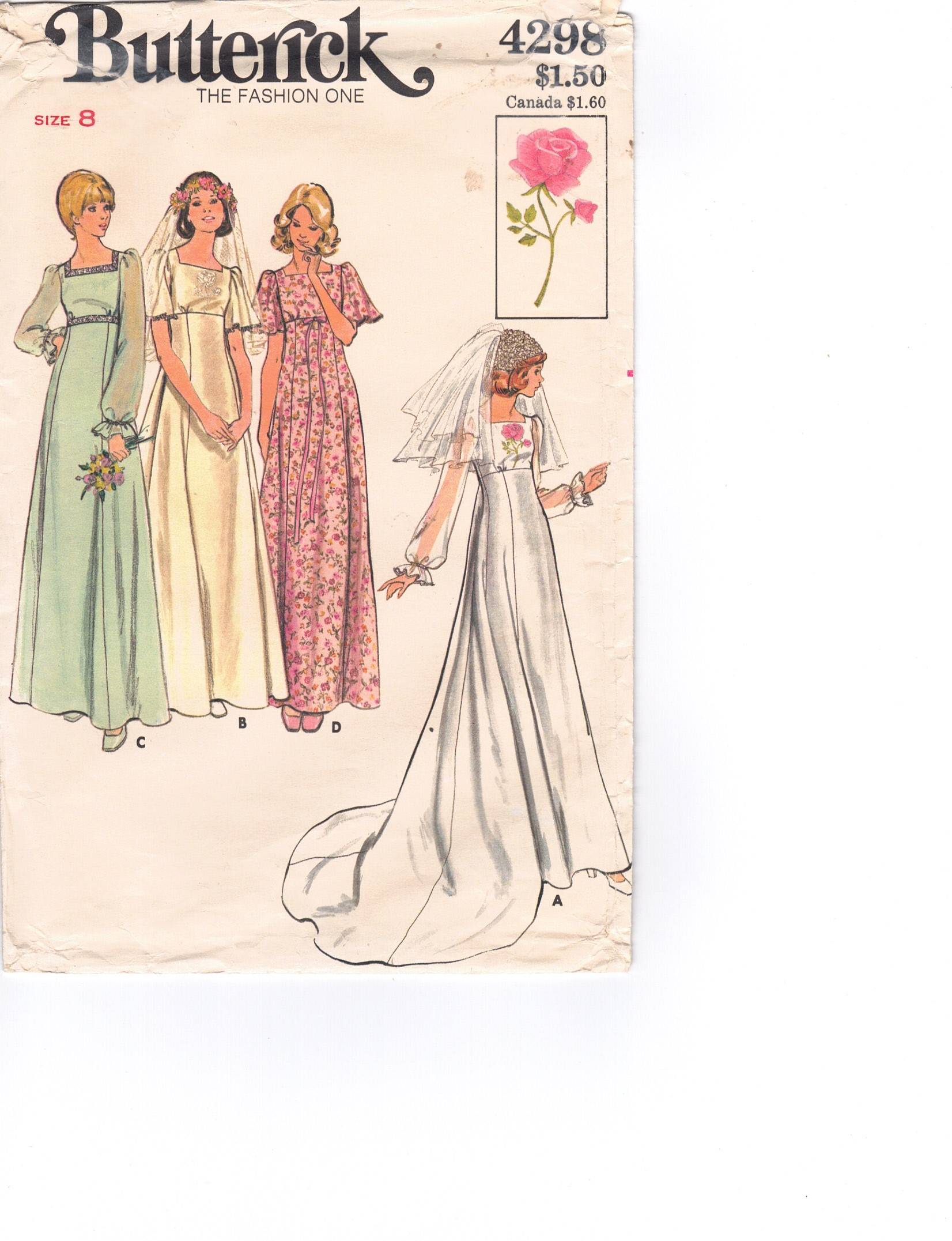

Butterick Pattern 4298 Wedding Gown, Bridesmaid and formal dresses

Butterick Pattern 4298 Wedding Gown, Bridesmaid and formal dresses Dabur Vatika Naturals Hair Cream, Natural Moisturizing Hair Cream for – RedBay Dental

Dabur Vatika Naturals Hair Cream, Natural Moisturizing Hair Cream for – RedBay Dental LOVERS CANDY EDIBLE Underwear Bra G String Pouch Nipple Tassles Ring Valentine £6.95 - PicClick UK

LOVERS CANDY EDIBLE Underwear Bra G String Pouch Nipple Tassles Ring Valentine £6.95 - PicClick UK This Beachside Goan Retreat Should Be On Every Yogi's Bucket List

This Beachside Goan Retreat Should Be On Every Yogi's Bucket List Signs Your Crush Feels Comfortable Around You

Signs Your Crush Feels Comfortable Around You Outdoor Mens Soft shell Camping Tactical Cargo Pants Combat Hiking Trousers UK

Outdoor Mens Soft shell Camping Tactical Cargo Pants Combat Hiking Trousers UK